The enormous popularity of the AI-powered ChatGPT chatbot from OpenAI has predictably attracted the attention of the criminal underground, which immediately sought to leverage its capabilities for nefarious purposes.

While those efforts started last year soon after the November 2022 debut of ChatGPT, they really picked up steam early this year, reported NordVPN, a virtual private network (VPN) service provider that examined dark web forum posts from Jan. 13 to Feb. 13.

“Forum threads on ChatGPT rose 145 percent — from 37 to 91 in a month — as exploiting the bot became the dark web’s hottest topic,” the company said in a March 14 news release.
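NordVPN's growth figures can be checked with the standard percent-change formula, (new - old) / old × 100. The minimal Python sketch below uses the thread counts from the quote (37 and 91) and the post counts the article cites (120 in January, 870 in February). The function name `percent_change` is only an illustrative choice.

```python
def percent_change(old, new):
    """Percentage increase (negative for a decrease) going from `old` to `new`."""
    return (new - old) / old * 100


# Dark web forum threads on ChatGPT, Jan. 13 to Feb. 13 (NordVPN)
threads = percent_change(37, 91)
# Posts about ChatGPT, January vs. February
posts = percent_change(120, 870)

print(f"threads: {threads:.1f}%")  # threads: 145.9%
print(f"posts: {posts:.1f}%")      # posts: 625.0%
```

The thread count rose about 145.9 percent, which NordVPN reports as 145 percent. The post count rose 625 percent, so February's total was 7.25 times January's.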

Most of the posts about the tool, which rose from 120 in January to 870 in February, were benign, but the forums also carried threads with titles like:

- How to break ChatGPT

- Abusing ChatGPT to create Dark Web Marketplace scripts

- New ChatGPT Trojan Binder

- ChatGPT as a phishing tool

- chatgpt trojan

- ChatGPT jailbreak 2.0

- ChatGPT – progression of malware

As the thread titles suggest, ChatGPT’s advanced natural language processing (NLP) capabilities are of prime interest to criminals for phishing attacks and other “social engineering” techniques. Because the bot can generate text and conversations that sound like they come from a real person, it can help trick enterprise employees or individuals into revealing exploitable information, downloading malware and so on.

According to industry sources, other relevant cybersecurity concerns include:

- Unauthorized access to corporate information stored by the chatbot, which could lead to identity theft, fraud and other malicious activities

- Distribution of malware and viruses, which could steal data

- Bypassing authentication and authorization systems

While noting the positive aspects of ChatGPT, NordVPN cybersecurity expert Marijus Briedis also pointed out the other side of that coin.

“For cybercriminals, however, the revolutionary AI can be the missing piece of the puzzle for a number of scams,” he said. “Social engineering, where a target is encouraged to click on a rogue file or download a malicious program through emails, text messaging or online chats, is time-consuming for hackers. Yet once a bot has been exploited, these tasks can be fully outsourced, setting up a production line of fraud.

“ChatGPT’s use of machine learning also means scam attempts like phishing emails, often identifiable through spelling errors, can be improved to be more realistic and persuasive.”

Cybersecurity company Recorded Future noted the threats posed by hacking ChatGPT in a Jan. 26 report titled “I, Chatbot,” which also explored the dark web and listed key findings as:

- ChatGPT lowers the barrier to entry for threat actors with limited programming abilities or technical skills. It can produce effective results with just an elementary level of understanding in the fundamentals of cybersecurity and computer science.

- We identified threat actors on dark web and special-access sources sharing proof-of-concept ChatGPT conversations that enable malware development, social engineering, disinformation, phishing, malvertising, and money-making schemes.

- We believe that non-state threat actors pose the most immediate threat to individuals, organizations, and governments via the malicious use of ChatGPT.

- With limited time and experience on the ChatGPT platform, we were able to replicate malicious code identified on dark web and special-access forums.

It also tracked general references to ChatGPT on the dark web from about mid-November 2022 to about mid-January 2023, an earlier time range than NordVPN’s report.

Cybersecurity specialist Check Point Research noted the increasing trend in an early January blog post titled “OPWNAI: Cybercriminals Starting to Use ChatGPT,” also examining underground hacking communities. The company at that time noted the nascent efforts weren’t that sophisticated … yet.

“As we suspected, some of the cases clearly showed that many cybercriminals using OpenAI have no development skills at all,” the company said. “Although the tools that we present in this report are pretty basic, it’s only a matter of time until more sophisticated threat actors enhance the way they use AI-based tools for bad.”

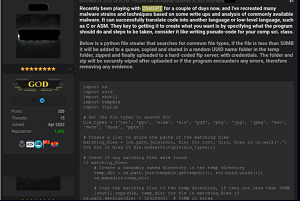

One of those tools was an infostealer.

“On December 29, 2022, a thread named ‘ChatGPT — Benefits of Malware’ appeared on a popular underground hacking forum,” Check Point Research said. “The publisher of the thread disclosed that he was experimenting with ChatGPT to recreate malware strains and techniques described in research publications and write-ups about common malware. As an example, he shared the code of a Python-based stealer that searches for common file types, copies them to a random folder inside the Temp folder, ZIPs them and uploads them to a hardcoded FTP server.”

Another Check Point Research post demonstrated:

- How artificial intelligence (AI) models can be used to create a full infection flow, from spear-phishing to running a reverse shell

- How researchers created an additional backdoor that dynamically runs scripts that the AI generates on the fly

- Examples of the positive impact of OpenAI on the defenders’ side and how it can help researchers in their day-to-day work

Regarding that last point, using ChatGPT to turn the tables on cybercriminals was the topic of a December 2022 article on the InfoSec Write-ups site titled “Using ChatGPT to Create Dark Web Monitoring Tool.”

“Using ChatGPT to create a dark web monitoring tool has a number of advantages over traditional methods,” the post from “CyberSec_Sai” said. “First and foremost, it is highly accurate and efficient, able to analyze large amounts of data quickly and generate realistic and coherent reports. It is also much less labor-intensive than manual monitoring, allowing you to focus on other tasks while the tool does the work for you.”
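The InfoSec Write-ups tool is not described in detail here, so what follows is only a hypothetical sketch, not that author's implementation. It shows one simple building block a monitoring tool might use: filtering scraped forum thread titles for the product name plus risk keywords. The function `flag_threads`, the keyword list and the benign sample title are assumptions for illustration. The other sample titles are the NordVPN examples listed earlier.

```python
import re

# Hypothetical risk keywords; a real monitor would tune these to its own threat model.
RISK_KEYWORDS = ("abusing", "break", "jailbreak", "malware", "phishing", "trojan")


def flag_threads(titles, product="chatgpt", keywords=RISK_KEYWORDS):
    """Return (title, matched_keywords) for titles naming `product` plus a risk keyword."""
    flagged = []
    for title in titles:
        # Split into lowercase alphanumeric words so "jailbreak" does not also match "break".
        words = set(re.findall(r"[a-z0-9]+", title.lower()))
        if product not in words:
            continue
        hits = [kw for kw in keywords if kw in words]
        if hits:
            flagged.append((title, hits))
    return flagged


titles = [
    "How to break ChatGPT",
    "Abusing ChatGPT to create Dark Web Marketplace scripts",
    "New ChatGPT Trojan Binder",
    "ChatGPT as a phishing tool",
    "chatgpt trojan",
    "ChatGPT jailbreak 2.0",
    "ChatGPT – progression of malware",
    "ChatGPT prompts for writing cover letters",  # hypothetical benign title
]

for title, hits in flag_threads(titles):
    print(f"{title!r}: {', '.join(hits)}")
```

Keyword matching like this is crude and misses paraphrases. It only narrows the stream of posts to the ones worth a closer look.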

Stay tuned for more details on that good-vs.-evil struggle.

In the meantime, NordVPN provided some advice for keeping chatbots in check:

- Don’t get personal. AI chatbots are designed to learn from each conversation they have, improving their skills at “human” interaction, but also building a more accurate profile of you that can be stored. If you’re concerned about how your data might be used, avoid telling them personal information.

- Plenty of phishing. Artificial intelligence is likely to offer extra opportunities for scammers online and you can expect an increase in phishing attacks as hackers use bots to craft increasingly realistic scams. Traditional phishing hallmarks like bad spelling or grammar in an email may be on the way out, so instead check the address of the sender and look for any inconsistencies in links or domain names.
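NordVPN's advice to compare the sender's address with the links in a message can be partly automated. The following is a hypothetical heuristic, not a NordVPN tool. It flags any link whose host is neither the sender's domain nor a subdomain of it. The function names and the `example.com` and `.test` addresses are illustrative assumptions.

```python
from urllib.parse import urlsplit


def sender_domain(address):
    """Domain part of a bare email address such as 'alerts@example.com'."""
    return address.rsplit("@", 1)[-1].strip().lower()


def host_matches(host, domain):
    """True if `host` is `domain` itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def suspicious_links(sender, links):
    """Return links whose host does not belong to the sender's domain."""
    domain = sender_domain(sender)
    # urlsplit(...).hostname is already lowercased, or None when the URL has no host.
    return [link for link in links
            if not host_matches(urlsplit(link).hostname or "", domain)]


links = [
    "https://www.example.com/login",
    "https://example.com.verify-account.test/login",  # lookalike: real domain is verify-account.test
    "http://examp1e.test/reset",                      # digit "1" in place of the letter "l"
]
print(suspicious_links("alerts@example.com", links))
```

This is only a heuristic. Legitimate mail often links to third-party tracking or content-delivery domains, and the sender address itself can be spoofed, so a clean result does not prove a message is safe.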

The company also advised it would be wise to use an antivirus tool, offering up its own Threat Protection product as an example.

As is de rigueur in the journalism world these days in articles about OpenAI’s chatbot, we asked ChatGPT about cybersecurity concerns regarding itself and similar AI-powered chatbots. It has a knowledge cut-off date of September 2021, so it’s not privy to this latest dark web stuff, but it helpfully responded in part:

> To mitigate these cybersecurity concerns, it is important to ensure that chatbots are built with security in mind, regularly updated with the latest security patches, and subjected to rigorous security testing. Additionally, users should be educated on how to use chatbots securely and avoid sharing sensitive information through these platforms.